Since OpenAI released ChatGPT, discussion of generative AI has become widespread but often confusing, and much of it comes down to trust. Amid the debates about the technology's nature and impact, the main concern for three-quarters of Americans is whether AI can be trusted. Artefact, a Seattle-based studio dedicated to responsible design, sees this as the crucial question. The studio believes that trust in generative AI can be fostered through transparency, integrity, and agency.

Transparency:

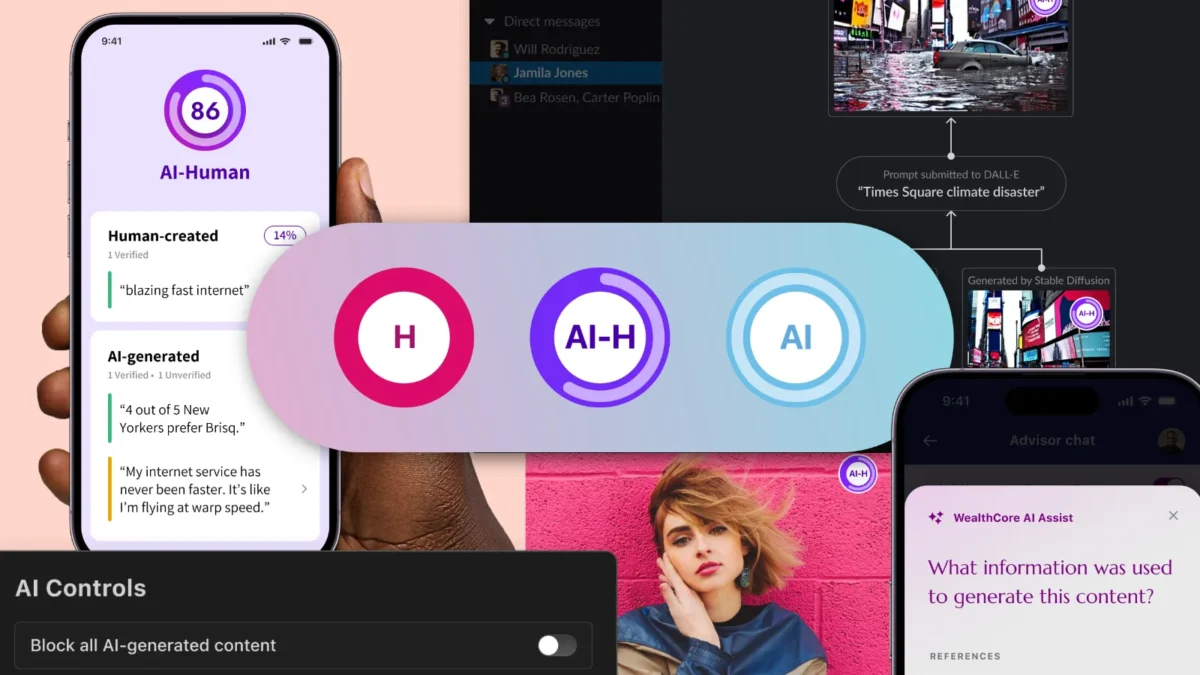

Inform at a Glance

To trust generative AI, people first need to know when it is at play. Artefact has designed a standardized badge system inspired by content ratings and nutrition labels. The badges categorize content as H (100% human-generated), AI (100% machine-generated), or AI-H (a combination of human- and machine-generated). The AI-H badge visually represents the ratio of human-made to machine-made content. A blue hue signifies trust and intensifies as the content becomes more AI-driven. Clicking or tapping the badge reveals additional information about how the content was made.
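The badge rules above can be sketched in a few lines of Python. This is only an illustration, not Artefact's implementation: the names `Badge` and `badge_for`, the `ai_share` input, and the linear mapping from AI share to blue intensity are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Badge:
    label: str            # "H", "AI", or "AI-H"
    ai_share: float       # fraction of the content that is machine-generated (0.0 to 1.0)
    blue_intensity: float  # hypothetical rendering hint: deeper blue for more AI


def badge_for(ai_share: float) -> Badge:
    """Pick a badge from the share of machine-generated content.

    Assumption: the share is already known; the article does not say how
    it would be measured.
    """
    if not 0.0 <= ai_share <= 1.0:
        raise ValueError("ai_share must be between 0 and 1")
    if ai_share == 0.0:
        label = "H"      # 100% human-generated
    elif ai_share == 1.0:
        label = "AI"     # 100% machine-generated
    else:
        label = "AI-H"   # a mix of human and machine
    # Assumed linear mapping: the blue hue intensifies with the AI share.
    return Badge(label=label, ai_share=ai_share, blue_intensity=ai_share)
```

For example, `badge_for(0.4)` would produce an AI-H badge whose ratio display and hue reflect content that is 40% machine-generated.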

Integrity:

Dig into Details

Detecting the presence of AI is one thing; evaluating the content’s integrity is another. Artefact envisions a personal finance app in which chats with a human adviser are interwoven with AI-generated recommendations. To address concerns about authenticity, Artefact introduces a series of nested prompts. A toggle separates machine-generated text from human commentary, and users can then drill into the AI’s provenance and processes. The app presents verified information sources, language-model specifications, and potential conflicts of interest, fostering trust through deeper understanding.
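One way to picture the toggle and the nested details is as a small data model. The sketch below is hypothetical: `Provenance`, `Message`, `split_by_author`, and their field names are invented for illustration and do not come from Artefact's design.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Provenance:
    """Details a user could reach by drilling into an AI-generated message."""
    model_spec: str                                   # language-model specification
    verified_sources: List[str] = field(default_factory=list)
    conflicts_of_interest: List[str] = field(default_factory=list)


@dataclass
class Message:
    text: str
    machine_generated: bool
    provenance: Optional[Provenance] = None           # only set for machine text


def split_by_author(messages: List[Message]) -> Tuple[List[Message], List[Message]]:
    """Separate human commentary from machine-generated text, as the toggle does."""
    human = [m for m in messages if not m.machine_generated]
    machine = [m for m in messages if m.machine_generated]
    return human, machine
```

Keeping provenance attached to each machine-generated message, rather than to the conversation as a whole, lets every recommendation carry its own sources and disclosures.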

Agency:

Control the Noise

Information overload and misinformation are among the most stressful aspects of digital life, and AI amplifies both. Artefact proposes a noise-canceling filter for machine-generated content that puts users in control. Much like managing mobile notifications, an interface resembling iOS Settings lets users set AI preferences app by app, so they can adjust their exposure to machine-generated content with little effort.

Combined, these design patterns make encounters with generative AI mundane, and that familiarity helps establish trust. A Slack interaction shows how the AI-H badge, the deeper exploration options, and the app- and OS-level filters work together to help users assess content.
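The noise-canceling filter described above could be modeled as an OS-level default with per-app overrides, much as notification settings work. This is a minimal sketch under that assumption; the three filter levels and the names `AIFilter`, `AIPreferences`, and `is_visible` are hypothetical and not part of Artefact's proposal.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict


class AIFilter(Enum):
    ALLOW_ALL = "allow_all"          # show everything
    HIDE_FULLY_AI = "hide_fully_ai"  # hide "AI" content, keep "AI-H" and "H"
    HUMAN_ONLY = "human_only"        # show only "H" content


@dataclass
class AIPreferences:
    os_default: AIFilter = AIFilter.ALLOW_ALL
    per_app: Dict[str, AIFilter] = field(default_factory=dict)

    def filter_for(self, app: str) -> AIFilter:
        """An app-specific setting wins; otherwise fall back to the OS default."""
        return self.per_app.get(app, self.os_default)


def is_visible(badge_label: str, policy: AIFilter) -> bool:
    """Decide whether content with the given badge ("H", "AI", "AI-H") is shown."""
    if policy is AIFilter.ALLOW_ALL:
        return True
    if policy is AIFilter.HIDE_FULLY_AI:
        return badge_label != "AI"
    return badge_label == "H"


# Usage: stricter filtering in one app, a looser default everywhere else.
prefs = AIPreferences(
    os_default=AIFilter.HIDE_FULLY_AI,
    per_app={"Slack": AIFilter.HUMAN_ONLY},
)
```

Here `prefs.filter_for("Slack")` returns the stricter app-level setting, while any app without an override inherits the OS-level default.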

The Path Forward:

These designs are meant as provocations, and implementing them would require technical and regulatory infrastructure. Authorities for generative AI, similar to the FDA, FCC, or MPAA, would lend credibility to the badges. Businesses that adopt trackable metadata for AI-generated content would make comprehensive provenance exploration possible. OS-level preference panes, in turn, would give users control over their encounters with AI. Artefact aims to spark concrete discussions and actions within organizations, businesses, and governments, ultimately driving the changes needed to build trust in AI.